Example: Using Time Zone Offset for Traffic Node Selection in an Atlas Cluster

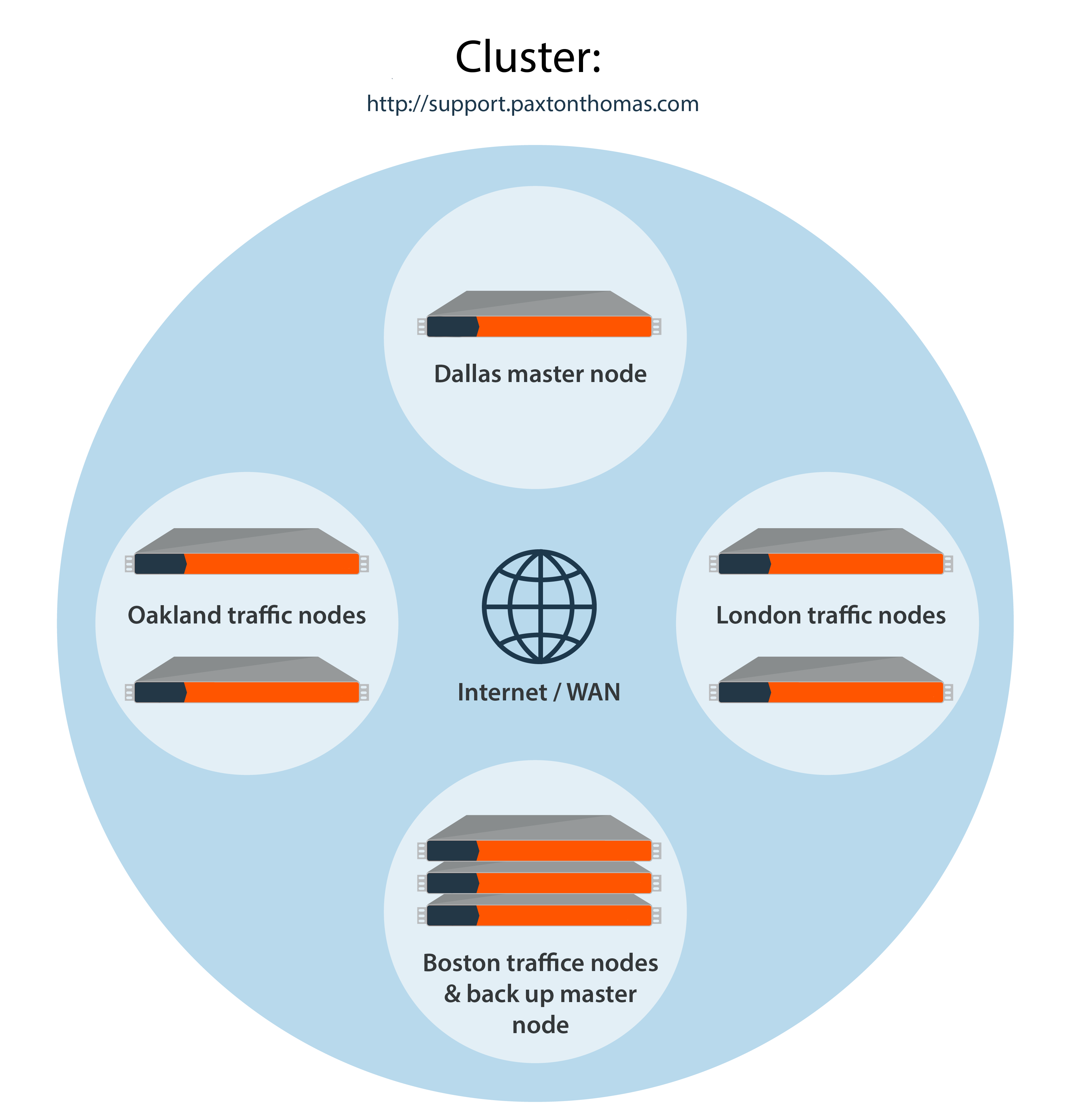

The Paxton Thomas support organization, http://support.paxtonthomas.com, is an example of a BeyondTrust clustered deployment that uses time zone offset for traffic node selection. Its representatives work in three locations: Boston, Oakland, and London. Paxton Thomas has datacenters in Dallas, Oakland, Boston, and London. Paxton Thomas chooses the Dallas datacenter as the location for the primary node because of its central location and its available resources, such as rack space, adequate power and cooling, and sufficient bandwidth.

Primary Node Selection and Setup

Paxton Thomas chooses a B300 B Series Appliance to serve as its primary node, because it will have fewer than 300 representatives logged in concurrently at any given time. Paxton Thomas’s failover strategy is to place a second B300 in its Boston datacenter to serve as the backup primary node for the cluster. If there is a total outage in the Dallas datacenter, operations can fail over to the backup primary node in Boston. Since the primary and backup B Series Appliances reside on different network segments, Paxton Thomas must use either the DNS swing or the NAT swing approach as part of its failover process.

Traffic Node Considerations

After deciding where the primary and backup nodes will reside, Paxton Thomas examines where most of its supported customers are located. A review of historical trends in closed support tickets shows that the majority of sessions involve customers on either the East Coast or the West Coast, with the remaining sessions involving customers in the central US or in Europe.

Based on this information, Paxton Thomas decides to place two traffic nodes in the Oakland datacenter, two traffic nodes in the Boston datacenter, and two traffic nodes in the London datacenter. Each traffic node is assigned a unique hostname, and the time zone offset for each B Series Appliance is set according to its physical location. Once the traffic nodes are deployed, configured, and joined to the cluster, the setup is complete.
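The resulting layout can be written down as a small inventory. The following is only an illustrative sketch, not BeyondTrust configuration syntax: the `TrafficNode` type, the `validate_inventory` helper, and the `example.com` hostnames are all hypothetical, and the Oakland (-8) and London (0) offsets are assumed standard-time values, since the example only states the Boston offset (-5).

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class TrafficNode:
    hostname: str    # hypothetical hostname, not taken from the example
    datacenter: str
    tz_offset: int   # hours relative to UTC (assumed standard-time values)


# Two traffic nodes per datacenter, as in the Paxton Thomas example.
TRAFFIC_NODES = [
    TrafficNode("oakland-1.example.com", "Oakland", -8),
    TrafficNode("oakland-2.example.com", "Oakland", -8),
    TrafficNode("boston-1.example.com", "Boston", -5),
    TrafficNode("boston-2.example.com", "Boston", -5),
    TrafficNode("london-1.example.com", "London", 0),
    TrafficNode("london-2.example.com", "London", 0),
]


def validate_inventory(nodes):
    """Check that every traffic node has a unique hostname."""
    hostnames = [node.hostname for node in nodes]
    if len(set(hostnames)) != len(hostnames):
        raise ValueError("each traffic node must have a unique hostname")
    return True


validate_inventory(TRAFFIC_NODES)
```

Nodes in the same datacenter share an offset, which is why a second criterion is needed to choose between them.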

Node Selection in Action

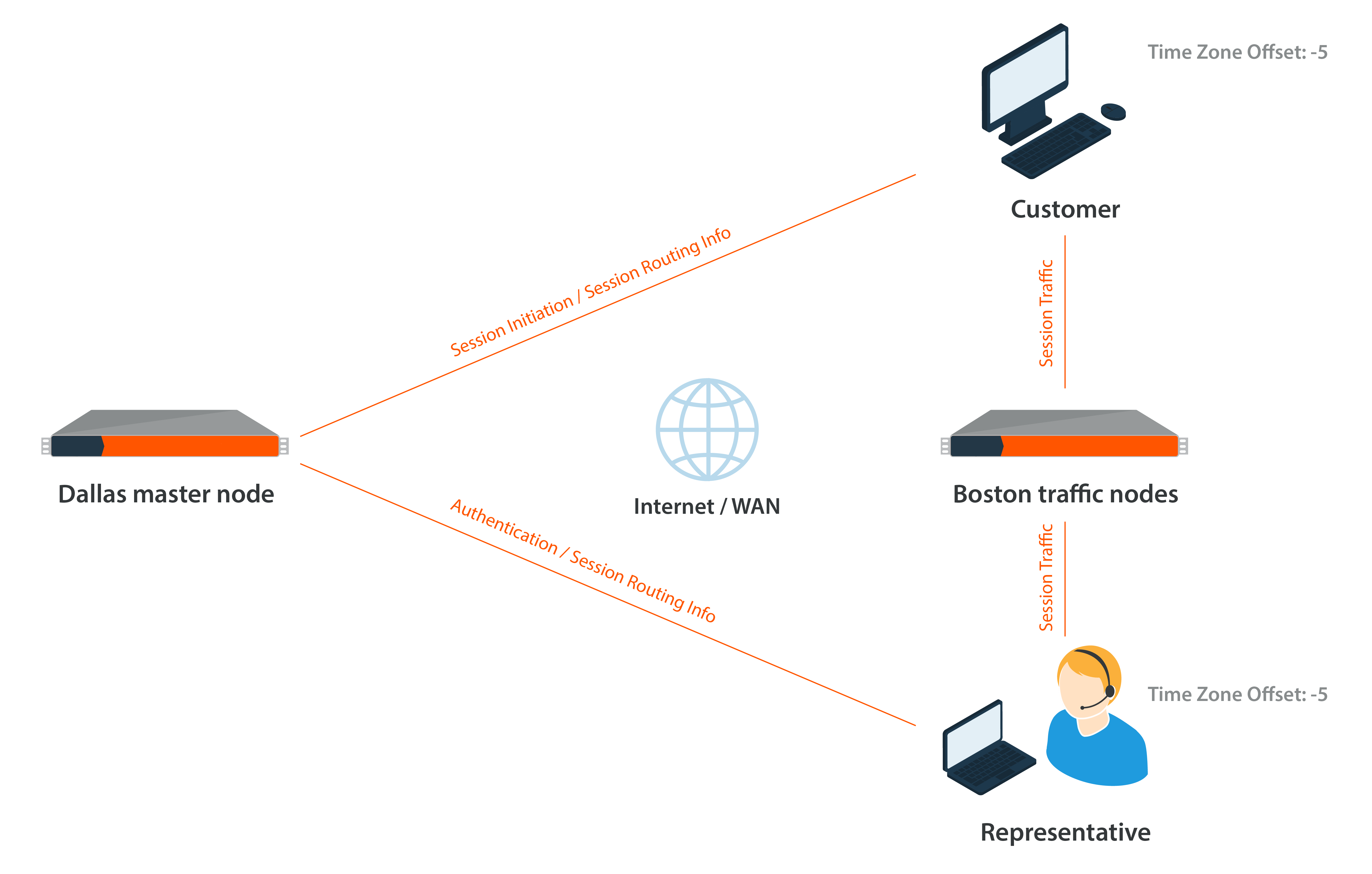

With the cluster configured, the Paxton Thomas support organization is ready to take support sessions. A Paxton Thomas support representative logs into the representative console, authenticating against the primary node. The console detects that the time zone offset on the representative’s computer is -5, which places this representative in the Eastern time zone; for this example, the representative is based in the Boston office. The primary node tells the representative console to connect to one of the two traffic nodes in the Boston datacenter, using that node’s hostname. Both of these nodes have the same time zone offset setting as the representative (-5), so the primary node directs the representative to the less busy of the two.

The logged-in representative now receives a call from a customer in Atlanta. The representative emails a session key to the customer, which takes the customer to the http://support.paxtonthomas.com site, hosted on the primary node B Series Appliance in Dallas. Before the customer client is installed, the customer’s time zone offset is determined (in this scenario, -5). The primary node determines that the closest traffic nodes for this customer are the two in the Boston datacenter, as they also have a -5 time zone offset. The primary node therefore chooses one of the two Boston traffic nodes from which to download the customer client, the client connects to that traffic node, and the session is initiated.
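The selection logic described for both the representative and the customer can be sketched as follows. This is a minimal illustration under stated assumptions, not BeyondTrust's actual implementation: it assumes "closest" means the smallest absolute difference between time zone offsets and that "less busy" can be measured by a count of active sessions. The `TrafficNode` type, the `select_traffic_node` function, the hostnames, and the session counts are all hypothetical.

```python
from dataclasses import dataclass


@dataclass
class TrafficNode:
    hostname: str          # hypothetical hostname
    tz_offset: int         # hours relative to UTC
    active_sessions: int   # assumed measure of how busy the node is


def select_traffic_node(nodes, client_offset):
    """Pick the node whose offset is closest to the client's offset.

    Ties between nodes with the same offset distance are broken by
    choosing the node with fewer active sessions.
    """
    if not nodes:
        raise ValueError("no traffic nodes available")
    return min(
        nodes,
        key=lambda n: (abs(n.tz_offset - client_offset), n.active_sessions),
    )


# Illustrative cluster state; the session counts are made up.
nodes = [
    TrafficNode("oakland-1.example.com", -8, 3),
    TrafficNode("oakland-2.example.com", -8, 7),
    TrafficNode("boston-1.example.com", -5, 12),
    TrafficNode("boston-2.example.com", -5, 4),
    TrafficNode("london-1.example.com", 0, 1),
    TrafficNode("london-2.example.com", 0, 2),
]

# Customer in Atlanta with a time zone offset of -5.
chosen = select_traffic_node(nodes, -5)
print(chosen.hostname)  # boston-2.example.com
```

Under the same assumptions, a customer in the central US (offset -6) would also be routed to Boston, because -6 is one hour from -5 but two hours from -8.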

In this scenario, the representative has a connection to the primary node in Dallas and to the designated traffic node in Boston. The customer client likewise has a connection to the primary B Series Appliance in Dallas as well as a connection to a traffic node in Boston. Throughout the session, the primary node coordinates the traffic between the traffic nodes being used to conduct the session. The bulk of the session traffic, such as screen sharing and file transfer, takes place at the traffic node level, while the connections from the representative and the customer to the primary node carry small pieces of information that coordinate the actions between traffic nodes and create the session log. The video of the session recording resides on the traffic node that the customer client is connected to. In this example, the video recording resides on the traffic node in Boston.